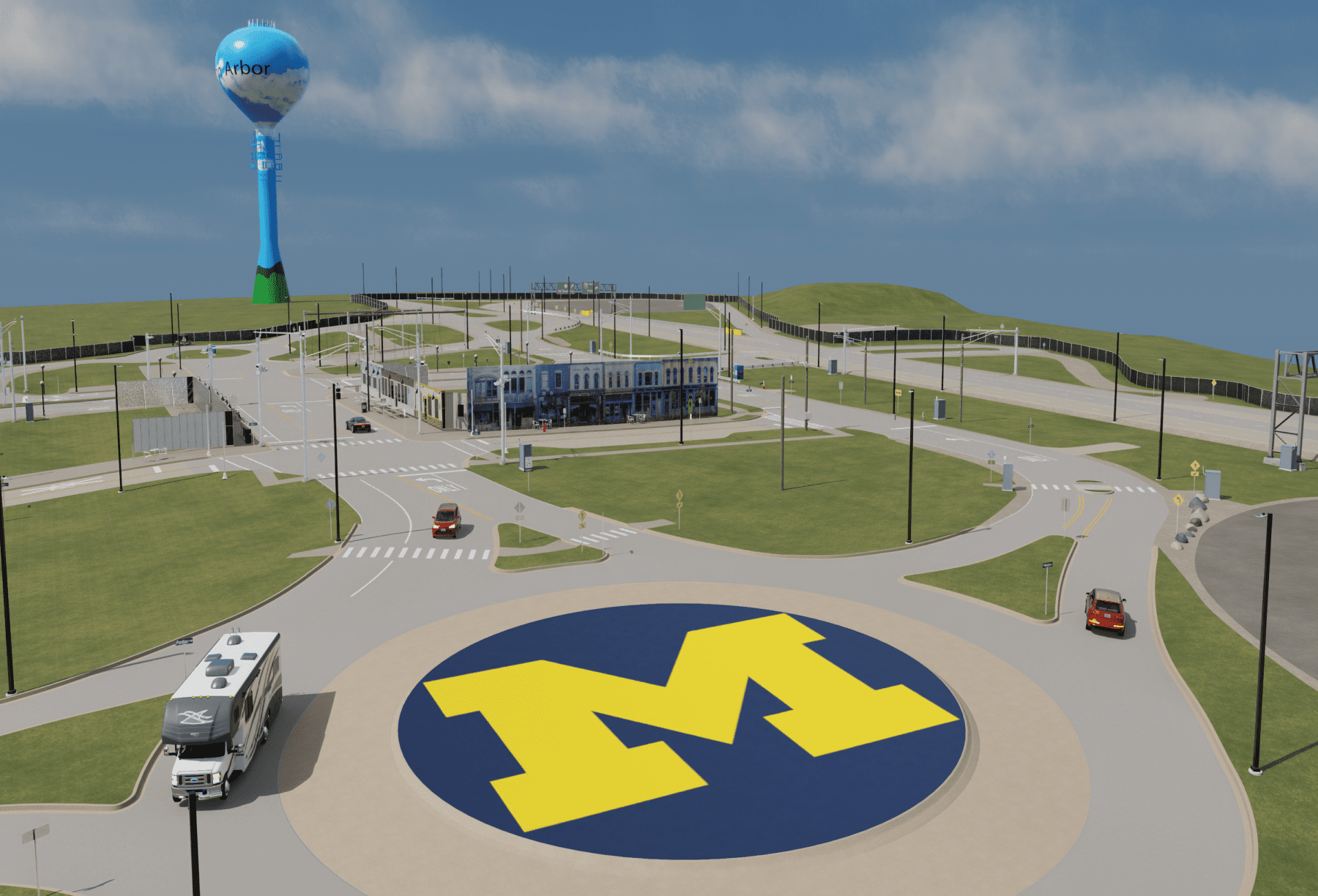

Mcity Test Facility digital twin enhanced by NVIDIA Omniverse

Mcity at the University of Michigan is tapping into the high fidelity of NVIDIA Omniverse to enhance the recently released digital twin of its Mcity Test Facility for autonomous vehicles (AVs) and related technologies. The goal is to close the divide between simulated and real-world testing conditions, known as the “sim-to-real gap.”

The sim-to-real gap describes the sometimes-not-so-subtle differences between a simulated environment and its real-life counterpart. Engineers have cited these differences in the past as a reason simulation is not robust enough for reliable AV testing.

Mcity is now integrating the NVIDIA Omniverse Blueprint for Autonomous Vehicle Simulation into its digital twin to enable physics-based modeling of camera, lidar, radar, and ultrasonic sensor data.

Mcity’s physical test sensor data and NVIDIA’s high-fidelity sensor simulation data, rendered via the NVIDIA Sensor RTX APIs, will be released as open source so that researchers can fully investigate and replicate Mcity’s results.

Using NVIDIA-supported cameras and lidars, Mcity is collecting real video and lidar data of the Mcity Test Facility so that it can validate the rendered sensor data against it.

The idea is to make it difficult, if not impossible, to tell which version is simulated.

Generating content for simulation is fundamentally different from most 3D modeling, which is primarily geared toward gaming. Game developers focus on optimizing performance across a wide range of hardware configurations. Mcity’s priority is not maximizing compatibility or speed but ensuring that its digital twin generates realistic data. This often means breaking game development rules of thumb; for example, assets are not optimized early for performance, because accuracy and detail take precedence over real-time speed.

A safe place to do unsafe things

Traditional AV road testing lacks the scalability needed to prove safety across a wide variety of rare or unusual situations, such as adverse weather conditions, sudden pedestrian crossings, or unpredictable driver behavior. It is particularly difficult to test dangerous scenarios on public roads without significant risk.

Physical proving grounds and digital twins like Mcity’s are safe places to do unsafe things.

Mcity and NVIDIA are working with MITRE, a government-sponsored nonprofit research organization, to provide both physical and digital resources to validate AVs and offer a safe, universally accessible solution that addresses the challenges of verifying autonomy.

By combining Mcity’s and NVIDIA’s capabilities with MITRE’s Digital Proving Ground reporting framework, developers will be able to perform exhaustive testing in a simulated world to safely validate AVs before real-world deployment.

As developers, automakers, and regulators continue to collaborate, the industry is moving closer to a future where AVs can operate safely and at scale.

Establishing a repeatable testbed for validating safety across real and simulated environments will be critical to gaining public trust and regulatory approval, bringing the promise of AVs closer to reality.

Before and After

The images below show the improved resolution possible with NVIDIA Omniverse. In each pair, the left image is a screenshot from the original Mcity Digital Twin, and the right image is the same asset rendered using the NVIDIA Sensor RTX APIs.

Brick Building Facade Inside Mcity Test Facility

Parking Meter Inside Mcity Test Facility